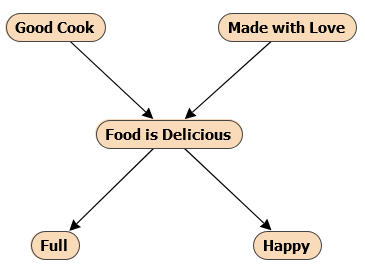

A Bayesian network is traditionally defined as a directed acyclic graph (DAG), made up of nodes and arrows, coupled with discrete conditional probability tables (CPTs). If an arrow starts at one node and points to another, the first node is called the parent and the second the child, but only with respect to that arrow: the same node can be a child on one arrow and a parent on another. Each node represents a random variable (that is, a variable that can be in any of its states with some probability), and the CPT for that node gives that variable’s probability distribution for every combination of states its parents can take. Here’s a simple example DAG for a Bayesian network:

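And here is an example CPT from that network:

| Food_is_Delicious | Happy = yes | Happy = no |
|---|---|---|
| yes | 0.95 | 0.05 |
| no | 0.3 | 0.7 |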

Read the above table left to right. If Food_is_Delicious is yes, then there is a 0.95 chance that Happy is yes (and a 0.05 chance that Happy is no). If Food_is_Delicious is no, then there is a 0.3 chance that Happy is yes (and a 0.7 chance that Happy is no). In simpler terms, if the Food is Delicious, then you have a 95% chance of being Happy; if not, then you only have a 30% chance of being Happy.*
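To make the table lookup concrete, here is a minimal sketch of the same CPT as a nested Python dictionary. The names `happy_cpt` and `p_happy` are my own illustrative choices, not part of any library; the numbers are the ones quoted above.

```python
# CPT for Happy given its single parent, Food_is_Delicious.
# Outer key: state of the parent. Inner dict: distribution over Happy.
happy_cpt = {
    "yes": {"yes": 0.95, "no": 0.05},
    "no": {"yes": 0.30, "no": 0.70},
}


def p_happy(happy, food_is_delicious):
    """Look up P(Happy = happy | Food_is_Delicious = food_is_delicious)."""
    return happy_cpt[food_is_delicious][happy]


# Each row is a probability distribution, so it must sum to 1.
for parent_state, row in happy_cpt.items():
    assert abs(sum(row.values()) - 1.0) < 1e-9, parent_state

print(p_happy("yes", "yes"))  # chance of Happy when the Food is Delicious
print(p_happy("yes", "no"))   # chance of Happy when it is not
```

A node with several parents would work the same way, except that the outer key would be a tuple holding one state per parent.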

This network is also causal. That means that each arrow should be interpreted as saying that the parent causes the child. And what does “causes” mean? Keeping things as simple as possible, it means that if in the real world you could somehow manipulate the parent, the probability distribution over the child would change. Even if it seems like it would require magic to change the parent, that’s OK, but the resulting change in the probability distribution over the child must not be magic. The magic needed to change a parent is called an intervention, and we typically say we intervene on a node to see its causal effects. In this case, intervening on the Deliciousness of the Food would then (non-magically) change the chances of being Full and Happy. Intervening on Happiness wouldn’t lead to any change in the Deliciousness of the Food.
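The asymmetry between intervening on a parent and intervening on a child can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the prior `P_FOOD` (a 60% chance that the Food is Delicious) is a number I made up, since none is given here, and the function names are hypothetical. The Happy probabilities are the 0.95 and 0.3 from the example CPT.

```python
# Assumed prior for the root node; this number is NOT from the article.
P_FOOD = {"yes": 0.6, "no": 0.4}

# P(Happy | Food_is_Delicious), using the values from the example CPT.
P_HAPPY_GIVEN_FOOD = {
    "yes": {"yes": 0.95, "no": 0.05},
    "no": {"yes": 0.30, "no": 0.70},
}


def p_happy(do_food=None):
    """P(Happy = yes), optionally under the intervention do(Food = do_food)."""
    if do_food is None:
        food_dist = P_FOOD
    else:
        # Intervening on a root node replaces its prior with a point mass.
        food_dist = {state: float(state == do_food) for state in P_FOOD}
    return sum(food_dist[f] * P_HAPPY_GIVEN_FOOD[f]["yes"] for f in food_dist)


def p_food(do_happy=None, see_happy=None):
    """P(Food = yes) after merely observing Happy, or after intervening on it."""
    if see_happy is not None:
        # Observation: ordinary Bayes' rule, evidence flows against the arrow.
        joint = {f: P_FOOD[f] * P_HAPPY_GIVEN_FOOD[f][see_happy] for f in P_FOOD}
        return joint["yes"] / sum(joint.values())
    # Intervention on the child cuts the arrow into Happy, so the forced
    # value of do_happy is deliberately ignored and Food keeps its prior.
    return P_FOOD["yes"]


print(p_happy())                 # no intervention
print(p_happy(do_food="yes"))    # forcing the parent shifts the child
print(p_food(do_happy="yes"))    # forcing the child leaves the parent alone
print(p_food(see_happy="yes"))   # but observing the child is still informative
```

Note the last two lines: seeing someone Happy raises your belief that the Food was Delicious, while making someone Happy by magic does not. That gap between observing and intervening is what the causal reading of the arrows adds.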

Bayesian networks don’t have to be causal. Here’s an example which isn’t:

If we interpreted this as causal, it would suggest that intervening on the Deliciousness of the Food would somehow lead to a Good Cook who made the food in the first place. That, of course, doesn’t make any sense. Nonetheless, some of the arrows still happen to be causal: those are the ones that haven’t changed from the first network, namely the arrows that point from Food is Delicious to Full and Happy. That is incidental, though, not something this network is meant to express.

This can get tricky, but generally, a Bayesian network is called causal if whoever uses it intends to use it causally. I’m not using this second one causally. If I were, then it would be a causal Bayesian network with half of the arrows incorrect. That may or may not be OK (just like any error), depending on what you’re doing. In fact, it’s also totally OK to have a partly causal Bayesian network — just put a note on the arrows that aren’t causal, so that you know how to step over them when you’re trying to work out the causal effects — like if you’re trying to work out if intervening on Made with Love can lead to you being Full, or vice versa.
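One possible way to keep such notes is to store a flag on every arrow and only follow the flagged ones when asking causal questions. The sketch below is my own reading of that idea, not a standard algorithm from any library. The first three arrows match the second network as described in the text; the arrow into `Made_with_Love` is a guess on my part, and `can_causally_affect` is a hypothetical helper name.

```python
from collections import deque

# Arrows of the second network as (parent, child, is_causal).
ARROWS = [
    ("Food_is_Delicious", "Full", True),
    ("Food_is_Delicious", "Happy", True),
    ("Food_is_Delicious", "Good_Cook", False),
    ("Food_is_Delicious", "Made_with_Love", False),  # assumed arrow
]


def can_causally_affect(source, target, arrows=ARROWS):
    """True if a directed path made only of causal arrows runs source -> target."""
    children = {}
    for parent, child, is_causal in arrows:
        if is_causal:  # arrows noted as non-causal are left out entirely
            children.setdefault(parent, []).append(child)

    # Breadth-first search over the causal arrows only.
    seen, queue = {source}, deque([source])
    while queue:
        node = queue.popleft()
        if node == target and node != source:
            return True
        for child in children.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return False


print(can_causally_affect("Food_is_Delicious", "Full"))
print(can_causally_affect("Made_with_Love", "Full"))
print(can_causally_affect("Full", "Made_with_Love"))
```

Under these assumptions the network supports a causal effect of Food is Delicious on Full, but says nothing causal about Made with Love and Full in either direction: a non-causal arrow carries no causal information, so the search simply cannot cross it.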

* I’ll use bold to refer to a node/variable or one of its states. In text descriptions of Bayesian networks, I will freely switch between any functionally equivalent version of the variable name, such as Food is Delicious and Deliciousness of the Food, no and not, etc.